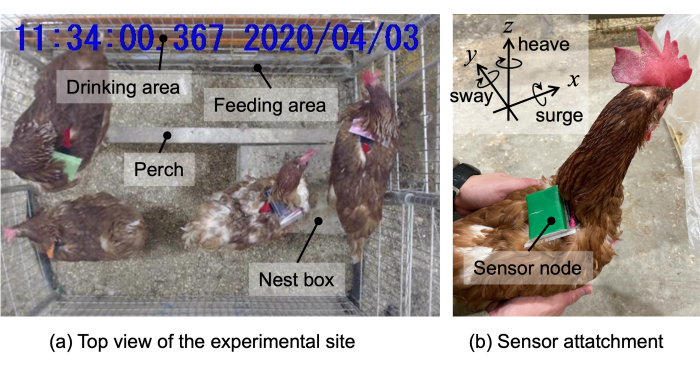

Hen behavior recognition using inertial sensors towards animal welfare (2019.4-)

Recently, animal welfare has gained worldwide attention. The concept of animal welfare encompasses the physical and mental well-being of animals. Rearing laying hens (layers) in conventional cages can restrict their instinctive behaviors and harm their health, which has increased animal welfare concerns. Therefore, welfare-oriented rearing systems have been explored to improve welfare while maintaining productivity. We are developing a behavior recognition system that uses a wearable inertial sensor to continuously monitor and quantify behaviors, with the aim of improving the rearing system. Twelve hen behaviors are recognized by supervised machine learning, and various parameters in the processing pipeline are examined, including the classifier, sampling frequency, window length, data imbalance handling, and sensor modality.
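
The sampling frequency and window length determine how the continuous sensor stream is cut into the segments that the classifier sees. The following is a minimal sketch, assuming a fixed-overlap sliding window over 3-axis acceleration and a few generic statistical features (per-axis mean and standard deviation, plus mean magnitude). The function names, the 50% overlap, and the feature set are illustrative assumptions, not the pipeline evaluated in the papers below.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one 3-axis accelerometer reading (x, y, z)


def sliding_windows(samples: Sequence[Sample], fs_hz: float,
                    window_s: float, overlap: float = 0.5) -> List[Sequence[Sample]]:
    """Cut a stream sampled at fs_hz into windows of window_s seconds.

    The default overlap ratio (0.5) is an illustrative assumption."""
    size = int(round(fs_hz * window_s))
    step = max(1, int(round(size * (1.0 - overlap))))
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def window_features(window: Sequence[Sample]) -> List[float]:
    """Hypothetical feature vector: per-axis mean and std, plus mean magnitude."""
    feats: List[float] = []
    for axis in range(3):
        values = [s[axis] for s in window]
        feats.append(statistics.mean(values))
        feats.append(statistics.pstdev(values))
    feats.append(statistics.mean(math.sqrt(x * x + y * y + z * z) for x, y, z in window))
    return feats
```

Changing `fs_hz` and `window_s` changes both the number of windows and the number of samples per window, which is why both are treated as pipeline parameters.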

- Tsuyoshi Shimmura, Itsufumi Sato, Ryo Takuno, and Kaori Fujinami, “Spatiotemporal Understanding of Behaviors of Laying Hens Using Wearable Inertial Sensors,” Poultry Science, Vol. 102, Issue 12, 2024. [link]

- Kaori Fujinami, Ryo Takuno, Itsufumi Sato, and Tsuyoshi Shimmura, “Evaluating Behavior Recognition Pipeline of Laying Hens Using Wearable Inertial Sensors”, Sensors 2023, Vol. 23, No. 11, Article No. 5077, 2023. [link]

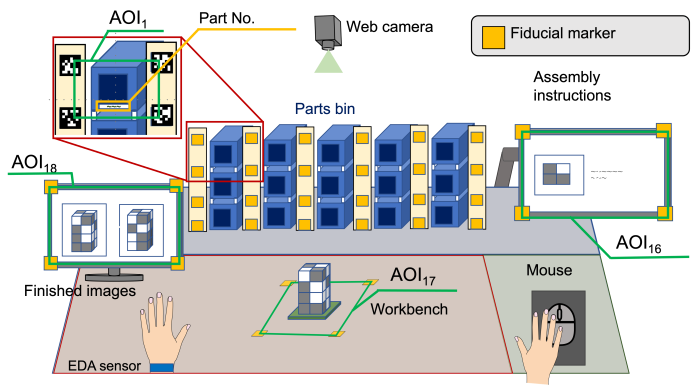

Confusion recognition during assembly tasks (2021.4-)

In recent years, products have increasingly been customized, and the skills of workers have a significant impact on production efficiency and product quality. Even unskilled workers should be able to work easily with the support of systems that effectively communicate information such as procedures and precautions. To realize such a support system, we investigate methods for detecting states of confusion during an assembly task. We take a multimodal approach, using eye gaze, bio-signals such as electrodermal activity and heart rate, and hand movements, among other cues, to characterize mental states.

- Kaori Fujinami and Tensei Muragi, “Recognizing Confusion in Assembly Work based on a Hidden Markov Model of Gaze Transition”, In Proc. the 2022 IEEE 11th Global Conference on Consumer Electronics (GCCE 2022), October 2022.

- Akinobu Watanabe, Tensei Muragi, Airi Tsuji, and Kaori Fujinami, “Recognition of the States of Confusion during Assembly Work based on Electrodermal Activity”, To appear in the 2022 IEEE 11th Global Conference on Consumer Electronics (GCCE 2022), October 2022.
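
The first GCCE 2022 paper above recognizes confusion with a hidden Markov model of gaze transitions. As a simplified illustration of the underlying idea, the sketch below uses a plain, fully observed first-order Markov chain instead of an HMM. It assumes gaze has already been mapped to areas of interest (AOIs) such as "manual", "parts", and "work" (hypothetical labels), estimates one transition matrix per state, and assigns a new sequence to the state whose model gives it the highest log-likelihood.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence

Model = Dict[str, Dict[str, float]]  # P(next AOI | current AOI)


def fit_transitions(sequences: Sequence[Sequence[str]], aois: Sequence[str],
                    alpha: float = 1.0) -> Model:
    """Estimate transition probabilities with additive (Laplace) smoothing.

    Every AOI appearing in the sequences must be listed in `aois`."""
    counts = {a: defaultdict(float) for a in aois}
    for seq in sequences:
        for cur, nxt in zip(seq, seq[1:]):
            counts[cur][nxt] += 1.0
    probs: Model = {}
    for cur in aois:
        total = sum(counts[cur].values()) + alpha * len(aois)
        probs[cur] = {nxt: (counts[cur][nxt] + alpha) / total for nxt in aois}
    return probs


def log_likelihood(seq: Sequence[str], model: Model) -> float:
    return sum(math.log(model[cur][nxt]) for cur, nxt in zip(seq, seq[1:]))


def classify(seq: Sequence[str], models: Dict[str, Model]) -> str:
    """Return the label (e.g., 'confused' or 'normal') of the best-fitting model."""
    return max(models, key=lambda label: log_likelihood(seq, models[label]))
```

An HMM adds hidden states on top of this, so the same likelihood-comparison scheme applies, but the transition estimate is replaced by model training such as Baum-Welch.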

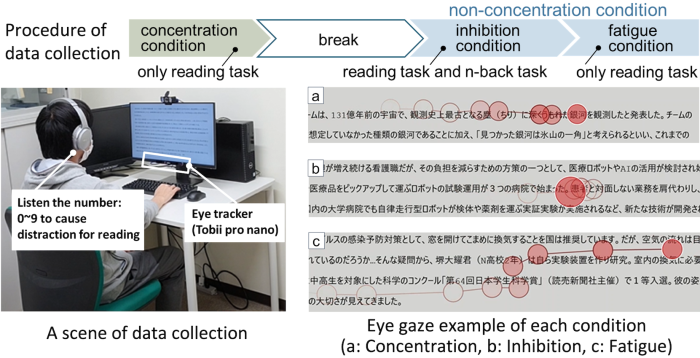

Eye Gaze-based Concentration Recognition (2021.4-)

Eye gaze reflects a person's mental state. In this study, we aim to detect concentration (as well as non-concentration states) using a screen-based eye tracker while a person silently reads a text, with the goal of increasing work productivity. Data were collected under three conditions: normal reading (as concentration), reading with an n-back task (as inhibition), and normal reading after the inhibition condition (as fatigue). We defined 69 features that represent the differences in scan paths among these conditions.
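
The 69 features are not listed on this page. As a hedged illustration of what scan-path features for silent reading can look like, the sketch below computes three common ones from a list of fixations: fixation count, mean fixation duration, and the proportion of regressive (leftward, same-line) saccades. The `Fixation` type, its fields, the 20 px line tolerance, and the assumption of left-to-right text are illustrative and not taken from the study.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Fixation:
    x: float            # horizontal screen position (px)
    y: float            # vertical screen position (px)
    duration_ms: float  # fixation duration


def scanpath_features(fixations: Sequence[Fixation],
                      line_tolerance_px: float = 20.0) -> Dict[str, float]:
    """Compute a few illustrative scan-path features for left-to-right text."""
    n = len(fixations)
    if n == 0:
        return {"fixation_count": 0.0, "mean_duration_ms": 0.0, "regression_rate": 0.0}
    saccades = list(zip(fixations, fixations[1:]))
    # A regression: moving leftward while staying on roughly the same text line.
    regressions = sum(
        1 for a, b in saccades
        if b.x < a.x and abs(b.y - a.y) <= line_tolerance_px
    )
    return {
        "fixation_count": float(n),
        "mean_duration_ms": sum(f.duration_ms for f in fixations) / n,
        "regression_rate": regressions / len(saccades) if saccades else 0.0,
    }
```

Computing such features per reading condition and comparing their distributions is one way to check which of them separate concentration from inhibition or fatigue.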

- Saki Tanaka, Airi Tsuji, and Kaori Fujinami, “Eye-Tracking for Estimation of Concentrating on Reading Texts,” International Journal of Activity and Behavior Computing, Vol. 2024, Issue 1, Article 10, May 2024. (link) (journal version of the ABC2023 paper, with some corrections to the results)

- Saki Tanaka, Airi Tsuji, and Kaori Fujinami, “Eye-Tracking for Estimation of Concentrating on Reading Texts”, In Proc. of the 5th International Conference on Activity and Behavior Computing (ABC2023), 8 September 2023.

- Saki Tanaka, Airi Tsuji, and Kaori Fujinami, “Poster: A Preliminary Investigation on Eye Gaze-based Concentration Recognition during Silent Reading of Text”, In Proc. of the 2022 ACM Symposium on Eye Tracking Research & Applications (ETRA’22), June 8-11, 2022. (paper)(video)

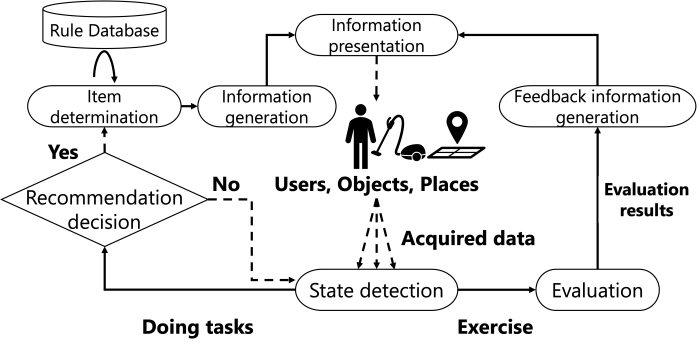

Context-aware exercise recommendation during other tasks (2020.4-)

Exercise has positive effects not only on the body but also on the mental state; however, many people worldwide do not get enough exercise, and this trend continues. One reason people find it difficult to increase their physical activity is that they are too busy with work and household chores. To address this issue, it is important to integrate exercise into daily life. We have investigated a system that facilitates exercise while the user performs other tasks, which we call “context-aware exercise recommendation”: the user’s current activity and location are treated as contextual information and used to filter the candidates down to exercises that are feasible and acceptable for the user.
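
The filtering step can be sketched as a lookup of which exercises are compatible with the user's current activity and location. The exercise names, context labels, and compatibility rules below are hypothetical examples for illustration only; the actual recommendation logic is described in the papers listed below.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Exercise:
    name: str
    allowed_activities: FrozenSet[str]  # activities during which it is feasible
    allowed_locations: FrozenSet[str]   # places where it is acceptable


def feasible_exercises(catalog: List[Exercise], activity: str, location: str) -> List[str]:
    """Keep only exercises compatible with both the current activity and location."""
    return [
        ex.name for ex in catalog
        if activity in ex.allowed_activities and location in ex.allowed_locations
    ]


# Hypothetical catalog entries.
CATALOG = [
    Exercise("calf raises", frozenset({"standing", "washing dishes"}), frozenset({"home", "office"})),
    Exercise("seated leg lifts", frozenset({"desk work"}), frozenset({"home", "office"})),
    Exercise("squats", frozenset({"standing"}), frozenset({"home"})),
]
```

For example, `feasible_exercises(CATALOG, "desk work", "office")` keeps only `"seated leg lifts"`; the context labels themselves would come from separate activity and location recognition.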

- Mizuki Kobayashi and Kaori Fujinami, “An Exercise Recommendation System While Performing Daily Activities Based on Contextual Information”, In Proceedings of the 2023 IARIA Annual Congress on Frontiers in Science, Technology, Services, and Applications (IARIA Congress 2023), pp. 188-195, 13-17 November 2023. [link][slides]

- Mizuki Kobayashi, Airi Tsuji, and Kaori Fujinami, “A Context-Aware Exercise Facilitation System While Doing Other Tasks”, In the 2022 IEEE 11th Global Conference on Consumer Electronics (GCCE 2022), October 2022.

- Mizuki Kobayashi, Airi Tsuji, and Kaori Fujinami, “An Exercise Promoting System for Exercising while Doing Desk Work”, In: Streitz, N.A., Konomi, S. (eds) Distributed, Ambient and Pervasive Interactions. Smart Living, Learning, Well-being and Health, Art and Creativity. HCII 2022. Lecture Notes in Computer Science, vol 13326. Springer, Cham. (online)

Projection-based public displays that attract people with peripheral vision (2018.4-2021.3)

We investigated a method for determining the presentation position that improves the noticeability of information presented on floor- and wall-projection public displays. Two information presentation methods (fixed-position presentation and moving presentation) and four parameters (distance to the person, distance from the gaze point, difference in position from other moving information, and difference in direction of movement from other moving information) were considered and evaluated. The experimental results showed that the method based on the distance to the person was the most effective for both presentation methods.
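
One way to read the distance-to-person criterion is as a scoring rule over candidate projection positions: prefer the candidate whose distance from the person is closest to a preferred value. The sketch below assumes 2D floor coordinates in metres and a hypothetical preferred distance of 1.5 m; neither the value nor this exact selection rule is taken from the study.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) floor coordinates in metres


def choose_position(person: Point, candidates: List[Point],
                    preferred_distance_m: float = 1.5) -> Point:
    """Pick the candidate whose distance to the person best matches the preferred distance."""
    return min(
        candidates,
        key=lambda c: abs(math.dist(person, c) - preferred_distance_m),
    )
```

The other parameters studied (gaze point, other moving information) could be added as further terms in the `key` function, at the cost of choosing weights between them.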

- Chihiro Hantani, Airi Tsuji, and Kaori Fujinami, “A Study on Projection-Based Public Displays that Attract People with Peripheral Vision”, In: Streitz, N.A., Konomi, S. (eds) Distributed, Ambient and Pervasive Interactions. Smart Environments, Ecosystems, and Cities. HCII 2022. Lecture Notes in Computer Science, vol 13325. Springer, Cham. (online)

Persuasive ambient display with a mechanism that attracts users to its contents (2020.4-2022.3)

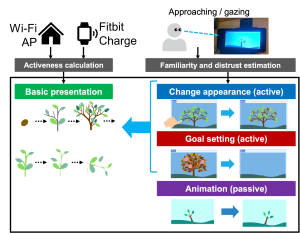

A number of studies have explored persuasive ambient displays, which present information in abstract forms such as illustrations, graphics, and shape changes to motivate users to adopt desirable behaviors while blending into the surrounding environment. Ambient displays can convey information without interfering with other tasks in daily life, and a well-designed form of expression helps users receive the information positively. The novelty of an ambient display may attract users at first; however, because the display presents information in a calm manner, users may gradually lose interest and stop paying attention to it during long-term use, which reduces the effectiveness of persuasion. Based on a model of how interest is lost over a long period, we investigate three methods to keep users interested in the contents of the display: active involvement by customizing the appearance and the goal at the beginning, and passive involvement by attracting gaze through animation.

- (Best paper award) Shiori Kunikata and Kaori Fujinami, “Customizability in Preventing Loss of Interest in Ambient Displays for Behavior Change”, In Proceedings of the 12th International Conference on Ambient Computing, Applications, Services and Technologies (AMBIENT 2022), November 2022.

- Shiori Kunikata, Airi Tsuji, and Kaori Fujinami; “Involvement of a System to Keep Users Interested in the Contents of Ambient Persuasive Display”, In Proceedings of the 2021 IEEE 10th Global Conference on Consumer Electronics (GCCE 2021), 12 October 2021.

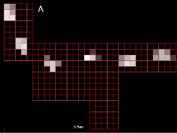

Software Structure Exploration in VR/MR environment (2019.4-2022.3)

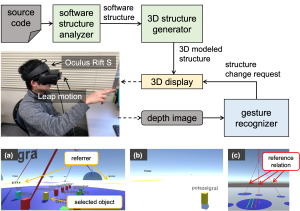

In recent years, society's dependence on software has been increasing; however, it is very difficult for developers to understand the complex structure and dependencies in a large amount of code. One approach to this issue is to represent the structure of the software visually. For example, the Unified Modeling Language (UML) uses a graphical notation to describe software. Visualizing the software structure in three-dimensional (3D) form, e.g., with urban metaphors, is expected to convey more information than a 2D form.

Additionally, virtual reality (VR) and mixed reality (MR) are often used as environments for visualizing and manipulating 3D software structures. We investigate an interaction method suitable for exploring software structures in VR and MR environments.
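
As an illustration of the urban metaphor, a common mapping (used, for example, in CodeCity-style visualizations) turns each class into a building whose height reflects its number of methods and whose footprint reflects its number of attributes, with buildings grouped into districts by package. The metric choices, the minimum size of one unit, and the `build_city` helper are assumptions for illustration, not the mapping used in this project.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ClassInfo:
    package: str
    name: str
    num_methods: int
    num_attributes: int


@dataclass
class Building:
    name: str
    height: float  # driven by the number of methods
    width: float   # square footprint, driven by the number of attributes


def build_city(classes: List[ClassInfo], unit: float = 1.0) -> Dict[str, List[Building]]:
    """Group classes into districts (packages) and map metrics to building sizes."""
    districts: Dict[str, List[Building]] = {}
    for c in classes:
        b = Building(
            name=c.name,
            # max(1, ...) keeps classes with zero methods/attributes visible.
            height=unit * max(1, c.num_methods),
            width=unit * max(1, c.num_attributes),
        )
        districts.setdefault(c.package, []).append(b)
    # Sort each district so the tallest buildings come first (e.g., for layout).
    for buildings in districts.values():
        buildings.sort(key=lambda b: b.height, reverse=True)
    return districts
```

The resulting districts and buildings would then be laid out and rendered in the VR or MR scene, where the interaction method determines how users navigate between them.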

- Tatsushi Aoki, Airi Tsuji, and Kaori Fujinami; “Software Structure Exploration in an Immersive Virtual Environment”, In Proceedings of the 2021 IEEE 10th Global Conference on Consumer Electronics (GCCE 2021), 15 October 2021.

Behavior Recognition of the Asian Black Bears using an Accelerometer (2019.12-)

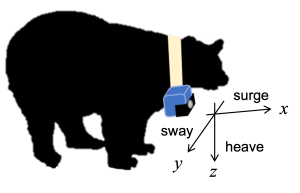

Advances in the miniaturization of sensing units, storage capacity, and battery life, together with progress in big-data processing technologies, are making it possible to recognize animal behaviors. This allows researchers to understand animals' space use patterns, social interactions, habitats, etc. We performed behavior recognition of Asian black bears (Ursus thibetanus) using a three-axis accelerometer embedded in collars attached to their necks. Machine learning was used to recognize seven bear behaviors, with oversampling and the extension of labels to periods adjacent to the labeled periods applied to overcome class imbalance and insufficient data in some classes. Experimental results showed the effectiveness of oversampling and large differences among individual bears. Effective feature sets varied depending on the experimental conditions.
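
The two data-side techniques can be sketched as follows: label extension copies a labeled window's class onto unlabeled windows within a given distance, and random oversampling duplicates minority-class samples until every class matches the majority class count. Random duplication is used here as the simplest oversampling variant; the study's exact oversampling method and extension length are not stated on this page, so `extend_labels`, `random_oversample`, and the `margin` parameter are hypothetical.

```python
import random
from collections import Counter
from typing import List, Optional, Sequence, Tuple


def extend_labels(labels: Sequence[Optional[str]], margin: int) -> List[Optional[str]]:
    """Give each unlabeled window (None) the label of a labeled window within `margin` steps.

    Only original labels are propagated; the nearest labeled neighbor wins,
    with ties going to the earlier window."""
    out = list(labels)
    for i, lab in enumerate(labels):
        if lab is not None:
            continue
        for d in range(1, margin + 1):
            left = labels[i - d] if i - d >= 0 else None
            right = labels[i + d] if i + d < len(labels) else None
            if left is not None or right is not None:
                out[i] = left if left is not None else right
                break
    return out


def random_oversample(xs: Sequence, ys: Sequence[str], seed: int = 0) -> Tuple[list, list]:
    """Duplicate randomly chosen minority-class samples until all classes are equally frequent."""
    rng = random.Random(seed)
    counts = Counter(ys)
    target = max(counts.values())
    out_x, out_y = list(xs), list(ys)
    for cls, n in counts.items():
        idx = [i for i, y in enumerate(ys) if y == cls]
        for _ in range(target - n):
            j = rng.choice(idx)
            out_x.append(xs[j])
            out_y.append(cls)
    return out_x, out_y
```

Oversampling should be applied to the training split only, so that duplicated windows do not leak into the evaluation data.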

- Kaori Fujinami, Tomoko Naganuma, Yushin Shinoda, Koji Yamazaki, and Shinsuke Koike; “Attempts toward Behavior Recognition of the Asian Black Bears using an Accelerometer”, In Proceedings of the 3rd International Conference on Activity and Behavior Computing (ABC’21), October 2021.

Sensor-augmented Belt for Abdominal Shape Measurement (2018.4-2021.3)

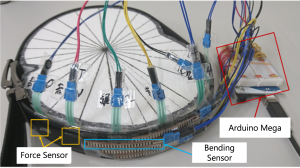

The objective of this study is to measure the shape of the abdominal circumference in a reference situation using a belt-type wearable device that can be worn daily. We implemented a prototype device with seven bending sensors and seven force sensors on the belt. The shape of the abdominal circumference was estimated from the belt shape, which was obtained by measuring the radius of curvature of the belt with the bending sensors. In addition, by measuring the force applied to the belt with the force sensors, we observed how the abdominal shape changes as the belt is tightened and, based on this, estimated the abdominal circumference without the belt.
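
Estimating a shape from bending sensors amounts to integrating curvature along the belt. The following is a minimal sketch, assuming each of the seven bending sensors reports the curvature (1/radius) of an equal-length belt segment and that curvature is constant within a segment: the heading angle advances by curvature × segment length, and each segment's end point follows from circular-arc geometry. The segment length, the sign convention, and the `reconstruct_shape` helper are illustrative assumptions; the force-based correction for belt tightening is not modeled.

```python
import math
from typing import List, Sequence, Tuple


def reconstruct_shape(curvatures: Sequence[float],
                      segment_length: float) -> List[Tuple[float, float]]:
    """Return 2D points at segment boundaries, starting at (0, 0) heading along +x.

    Positive curvature bends the belt to the left (counterclockwise)."""
    x, y, theta = 0.0, 0.0, 0.0
    points = [(x, y)]
    for k in curvatures:
        new_theta = theta + k * segment_length
        if abs(k) < 1e-12:  # (nearly) straight segment
            x += segment_length * math.cos(theta)
            y += segment_length * math.sin(theta)
        else:  # circular arc of radius 1/k
            x += (math.sin(new_theta) - math.sin(theta)) / k
            y += (math.cos(theta) - math.cos(new_theta)) / k
        theta = new_theta
        points.append((x, y))
    return points
```

If the seven segments span the whole circumference, the distance between the first and last points indicates how well the estimated contour closes, which can serve as a sanity check on the sensor readings.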

- Yukihiro Oishi, Airi Tsuji, and Kaori Fujinami; “A Sensor-augmented Belt toward Abdominal Shape Measurement System”, Sensors and Materials, Vol. 33, No. 12(1), pp. 4113-4133, December 2021. (link)

Hand-written Character Recognition based on acoustic gesture signatures (2017.4-2020.3)

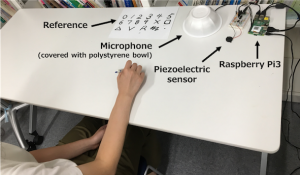

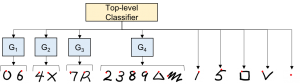

Gestural input for controlling artifacts and accessing the digital world is an essential part of highly usable systems. We proposed a gesture recognition method that leverages the sound generated by friction between a surface, such as a table, and a finger or pen, with 17 gestures defined. A hierarchical classifier structure is employed to improve accuracy for easily confused classes. Offline experiments showed that the highest accuracy was 0.954 when the classifiers were customized for each user, while an accuracy of 0.854 was obtained when the classifiers were trained without data from the test users. We also confirmed the effectiveness of the hierarchical classifier approach compared with a single flat classifier, and of a feature engineering approach compared with a feature learning approach, i.e., convolutional neural networks (CNNs).
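
The hierarchical structure can be sketched as a two-stage classifier: a top-level model first assigns a sample to a coarse group, and groups containing easily confused gestures are refined by a dedicated second-level model. The sketch uses a nearest-centroid rule at both levels purely to stay self-contained; the grouping, gesture names, and classifiers shown are hypothetical, and the acoustic features and classifiers used in the study are not reproduced here.

```python
import math
from typing import Dict, List, Sequence


class NearestCentroid:
    """Tiny nearest-centroid classifier (a stand-in for the real per-node classifiers)."""

    def fit(self, xs: Sequence[Sequence[float]], ys: Sequence[str]) -> "NearestCentroid":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for x, y in zip(xs, ys):
            acc = sums.setdefault(y, [0.0] * len(x))
            for i, v in enumerate(x):
                acc[i] += v
            counts[y] = counts.get(y, 0) + 1
        self.centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}
        return self

    def predict(self, x: Sequence[float]) -> str:
        return min(self.centroids, key=lambda y: math.dist(x, self.centroids[y]))


class HierarchicalClassifier:
    """Top level predicts a group; groups with more than one class get a second-level model."""

    def __init__(self, groups: Dict[str, List[str]]):
        self.groups = groups  # group name -> member gesture classes
        self.class_to_group = {c: g for g, cs in groups.items() for c in cs}

    def fit(self, xs, ys):
        self.top = NearestCentroid().fit(xs, [self.class_to_group[y] for y in ys])
        self.sub = {}
        for g, members in self.groups.items():
            if len(members) > 1:
                pairs = [(x, y) for x, y in zip(xs, ys) if y in members]
                self.sub[g] = NearestCentroid().fit([p[0] for p in pairs], [p[1] for p in pairs])
        return self

    def predict(self, x):
        g = self.top.predict(x)
        return self.sub[g].predict(x) if g in self.sub else self.groups[g][0]
```

The benefit of this structure is that each second-level model only has to separate a few similar gestures, so it can use features tuned to that distinction.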

- Miki Kawato and Kaori Fujinami; “Acoustic-sensing-based Gesture Recognition Using Hierarchical Classifier”, Sensors and Materials, Vol. 32, No. 9, pp. 2981-2998, 2020. [link]

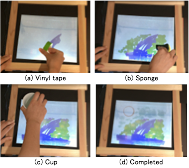

Hand occlusion management method for tabletop work support systems using a projector (2018.4-2021.3)

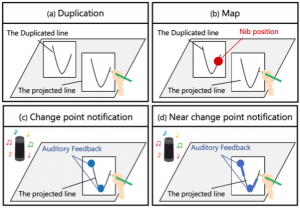

Work support systems using projectors can achieve greater work accuracy than systems using head-mounted displays because they add digital information directly onto existing objects. However, the projected information may be hidden by the user’s hand (hand occlusion), making the content difficult to recognize. We therefore propose methods for managing the hand occlusion that occurs when tracing a line, focusing on visual and auditory feedback.
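
Before any feedback can be given, the system needs to know which part of the projected line is hidden. A minimal sketch, assuming the hand has already been detected (e.g., by a depth camera) and is approximated by an axis-aligned bounding box in projector coordinates, and that the line is sampled at discrete points; the box model and both helper functions are hypothetical. The resulting occluded fraction is the kind of signal that visual or auditory feedback could be driven by.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) of the hand


def sample_line(p0: Point, p1: Point, n: int) -> List[Point]:
    """Return n evenly spaced points from p0 to p1 (inclusive, n >= 2)."""
    return [(p0[0] + (p1[0] - p0[0]) * i / (n - 1),
             p0[1] + (p1[1] - p0[1]) * i / (n - 1)) for i in range(n)]


def occluded_fraction(points: List[Point], hand: Box) -> float:
    """Fraction of line sample points that fall inside the hand's bounding box."""
    x0, y0, x1, y1 = hand
    hidden = sum(1 for x, y in points if x0 <= x <= x1 and y0 <= y <= y1)
    return hidden / len(points) if points else 0.0
```

A bounding box overestimates the hand's silhouette; a segmentation mask would give a tighter estimate at a higher processing cost.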

- Saki Shibayama and Kaori Fujinami; “A Comparison of Visual and Auditory Feedbacks towards Hand Occlusion Management in Tabletop Work Support Systems Using a Projector”, International Journal of Inspired Education, Science and Technology (IJIEST), Vol. 1, No. 2, pp. 35-42, 7 January 2020. (link)

- Saki Shibayama and Kaori Fujinami; “Hand-occlusion management for tabletop work support systems using a projector”, In Proceedings of the 2nd International Conference on New Computer Science Generation (NCSG), 13-14 March 2020.

Novelty detection in on-body device localization problem (2018.4-2021.3)

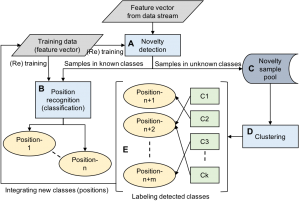

On-body device position awareness plays an important role in providing smartphone-based services with high levels of usability and quality. Existing on-body device localization methods employ multi-class classification, and the set of positions is fixed during use. However, training data must be collected from all possible positions in advance, which requires significant effort. In addition, people do not always use all positions; instead, they tend to have a few preferred storage positions. We therefore propose a framework that discovers new positions that the system does not initially support and incrementally adds them as recognition targets during use, with novelty detection as its core functionality.
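
The core novelty-detection step can be illustrated with a simple distance-based detector: a feature vector is flagged as coming from an unknown position when its distance to the nearest training sample of every known position exceeds a threshold, and a newly discovered position can then be registered as a class. This nearest-neighbor rule, the threshold, and the class name are assumptions chosen for brevity; the publications below evaluate other methods, including ensemble novelty detection and DBSCAN-based identification of new position candidates.

```python
import math
from typing import Dict, List, Sequence


class NearestNeighborNoveltyDetector:
    """Flag samples that are far from every training sample of the known positions."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.known: Dict[str, List[Sequence[float]]] = {}

    def fit(self, xs: Sequence[Sequence[float]],
            ys: Sequence[str]) -> "NearestNeighborNoveltyDetector":
        for x, y in zip(xs, ys):
            self.known.setdefault(y, []).append(x)
        return self

    def is_novel(self, x: Sequence[float]) -> bool:
        # Requires at least one known position to have been fitted.
        nearest = min(math.dist(x, ref) for refs in self.known.values() for ref in refs)
        return nearest > self.threshold

    def add_position(self, label: str, xs: Sequence[Sequence[float]]) -> None:
        """Register a newly discovered position (e.g., after the user names it)."""
        self.known.setdefault(label, []).extend(xs)
```

In practice, individual samples flagged as novel would be accumulated and grouped (for instance by clustering) before a new position is added, so that a single noisy sample does not create a class.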

- Mitsuaki Saito and Kaori Fujinami; “New Position Candidate Identification via Clustering towards Extensible On-Body Smartphone Localization System”, Sensors 2021, Vol. 21, No. 4, Article No. 1276, 2021. (link)

- Mitsuaki Saito and Kaori Fujinami; “Applicability of DBSCAN in Identifying the Candidates of New Positions in on-Body Smartphone Localization Problem”, In Proceedings of the 2020 IEEE 9th Global Conference on Consumer Electronics (GCCE 2020), pp. 393-396, October 2020. (link)

- Mitsuaki Saito and Kaori Fujinami; “New Class Candidate Generation applied to On-Body Smartphone Localization”, To be presented in the 2nd International Conference on Activity and Behavior Computing (ABC2020), 26-29 August 2020. (best paper nominee)

- Mitsuaki Saito and Kaori Fujinami; “Unknown On-Body Device Position Detection Based on Ensemble Novelty Detection”, Sensors and Materials, Vol. 32, No. 1, pp. 27-40, 2020. (link to publisher)

- Mitsuaki Saito and Kaori Fujinami; “Evaluation of Novelty Detection Methods in On-Body Smartphone Localization Problem”, In Proceedings of the 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE 2019), pp. 470-474, 16 October, 2019. (IEEE Xplore)

- Mitsuaki Saito and Kaori Fujinami; “Extensible On-Body Smartphone Localization: A Project Overview and Preliminary Experiment”, In Proceedings of the 17th International Conference on Pervasive Intelligence and Computing (PICom 2019), pp. 883-884, 6 August, 2019. (IEEE Xplore)

Personalizing context recognition model using active learning accelerated by compatibility-based base classifier selection (2017.4-)

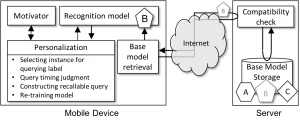

In context recognition, recognition models are often built with supervised machine-learning techniques. Recognition performance is likely to be affected by individual differences in the target domain, e.g., walking rhythm. We investigate a framework that allows mobile device users to take part in the customization process, built on the key concepts of active learning and persuasive technologies. As a case study on the smartphone localization problem, we evaluated the effectiveness of active learning supported by compatibility-based base classifier selection.
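
Active learning reduces the user's labeling effort by asking only about the samples the current model is least sure of. A minimal sketch of least-confidence uncertainty sampling, assuming the model outputs class probabilities for each unlabeled sample as a dictionary; the query strategy, the `budget` parameter, and the probability format are illustrative, and the compatibility-based base classifier selection used in this project to accelerate the process is not shown.

```python
from typing import Dict, List, Sequence


def least_confident(probas: Sequence[Dict[str, float]], budget: int) -> List[int]:
    """Return indices of the `budget` samples whose top class probability is lowest."""
    confidence = [max(p.values()) for p in probas]
    order = sorted(range(len(probas)), key=lambda i: confidence[i])
    return order[:budget]
```

The selected samples would be shown to the user for labeling, the model retrained, and the loop repeated until the user stops or the accuracy is sufficient.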

- (Best poster award) Kaori Fujinami, Trang Thuy Vu, and Koji Sato; “A Framework for Human-centric Personalization of Context Recognition Models on Mobile Devices”, In Proceedings of the 17th International Conference on Pervasive Intelligence and Computing (PICom 2019), pp. 885-888, 6 August, 2019. (IEEE Xplore)

- Kaori Fujinami; “Personalizing Context Recognition Model based on Active Learning: A Project Overview”, The 2nd International Workshop on Smart Sensing System (IWSSS’17), August 8 2017.

- Koji Sato and Kaori Fujinami; “Active Learning-based Classifier Personalization: A Case of On-body Device Localization”, In Proc. of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE2017), pp. 291-292, October 2017. (poster session)

Personalizing activity recognition model based on compatibility of classifiers (2018.4-2021.3)

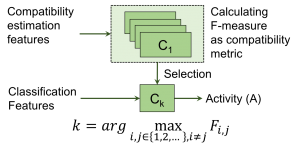

Personalization-related research has been conducted for decades in many domains, with the objective of investigating personal-level effectiveness in irregular or abnormal cases. However, the activity recognition community generally investigates one-size-fits-all models, i.e., a single recognition model for all people, which suffer from poor generalization or require a huge amount of training data to generalize well. We propose compatibility-based classifier personalization (CbCP), a subject-dependent activity recognition method that uses the classifier with the highest compatibility (similarity) with a particular user.
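
The selection step of CbCP can be sketched as follows: given a pool of classifiers (for example, each trained on a different person's data) and a small amount of labeled data from the target user, compatibility is measured on that data and the most compatible classifier is used for that user. Using plain accuracy as the compatibility measure and representing classifiers as callables are simplifying assumptions of this sketch, and the subject names and thresholds in the example are hypothetical.

```python
from typing import Callable, Dict, Sequence, Tuple

Classifier = Callable[[Sequence[float]], str]


def compatibility(clf: Classifier, xs: Sequence[Sequence[float]], ys: Sequence[str]) -> float:
    """Fraction of the user's labeled samples that the classifier gets right."""
    return sum(clf(x) == y for x, y in zip(xs, ys)) / len(ys)


def select_compatible(pool: Dict[str, Classifier], xs: Sequence[Sequence[float]],
                      ys: Sequence[str]) -> Tuple[str, float]:
    """Return the name and score of the pool classifier most compatible with the user."""
    scores = {name: compatibility(clf, xs, ys) for name, clf in pool.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical pool: two per-subject threshold rules on a single feature.
POOL = {
    "subject_A": lambda x: "walk" if x[0] > 1.0 else "still",
    "subject_B": lambda x: "walk" if x[0] > 2.0 else "still",
}
```

With user data `[[0.5], [1.5], [2.5]]` labeled `["still", "walk", "walk"]`, `subject_A` agrees on all three samples and would be selected.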

- Trang Thuy Vu; “Human Activity Recognition Employing Compatibility-based Classifier Personalization”, Ph.D. Thesis, Tokyo University of Agriculture and Technology, March 2021.

- Trang Thuy Vu and Kaori Fujinami; “Personalizing Activity Recognition Models by Selecting Compatible Classifiers with a Little Help from the User”, Sensors and Materials, Vol. 32, No. 9, pp. 2999-3017, 2020. [link]

- Trang Thuy Vu and Kaori Fujinami; “Examining Hierarchy and Granularity of Classifiers in Compatibility-based Classifier Personalization”, In Proceedings of the 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE 2019), pp. 553-554, 16 October, 2019.

- Trang Thuy Vu and Kaori Fujinami; “Understanding Compatibility-based Classifier Personalization in Activity Recognition”, In Proceedings of the 1st International Conference on Activity and Behavior Computing (ABC2019), pp. 97-102, May 2019.

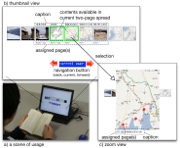

CrosSI: A Desktop Environment with Connected Horizontal and Vertical Surfaces (2017.4-2020.3)

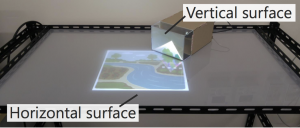

A typical desk workspace includes both horizontal and vertical areas, wherein users can freely move items between the horizontal and vertical surfaces and edit objects; for example, a user can place a paper on the desk, write a memo, and affix it to the wall. However, in workspaces designed to handle digital information, the usable area is limited to specific devices such as displays and tablets, and the user interface is discontinuous in terms of both the software and hardware. Therefore, we propose a multi-surface information display system CrosSI, which includes a horizontal surface and multiple cubic structures with vertical surfaces on the horizontal surface. Using this system, we investigate the ability to visualize and move information on a continuous system of horizontal and vertical surfaces.

- Risa Otsuki and Kaori Fujinami; “Transferring information between connected horizontal and vertical interactive surfaces”, In Proceedings of the 2nd International Conference on New Computer Science Generation (NCSG), March 2020.

- Risa Otsuki and Kaori Fujinami; “CrosSI: A novel workspace with connected horizontal and vertical interactive surfaces”, In Proc. of the 2018 ACM International Conference on Interactive Surfaces and Spaces (ISS’18, formerly ITS), poster session, pp. 339-344, 25-28 November, 2018. (ACM-DL)

Placement-aware Mobile Phone (v1: 2007.10-2010.3, v2: 2010.4-2013.3, v3: 2013.4-2016.3, v4: 2016.4-2018.3, v5: 2018.4-2021.3)

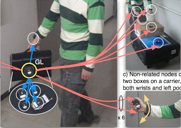

We are investigating a method to identify the location of a mobile phone on the user’s body, e.g., the front trouser pocket or hanging from the neck. The identification algorithm uses only a single three-axis accelerometer, which is increasingly common in commercial mobile phones. An application on the phone uses the location information as context about both the user and the device itself. The application scenarios are 1) smart call/message notification, 2) assurance of sensor placement, and 3) location-aware functionality control. Six popular locations are specified as targets, and our identification algorithm works even when the location of the mobile phone changes while the user is in nonstationary motion, e.g., standing. This allows the above applications to work seamlessly.
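
A phone in a trouser pocket, in a chest pocket, or hanging from the neck can be expected to rest in different orientations, so the direction of gravity in the device frame is a plausible cue. A minimal sketch of one such feature: pitch and roll estimated from the mean acceleration over a window. The axis convention and the use of tilt as a feature are assumptions for illustration, not the feature set used in the publications below.

```python
import math
from typing import Sequence, Tuple


def gravity_tilt(window: Sequence[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Estimate (pitch, roll) in degrees from the mean acceleration over a window.

    Averaging approximates the gravity component; the axis convention
    (x to the right, y up the screen, z out of the screen) is an assumption."""
    n = len(window)
    gx = sum(s[0] for s in window) / n
    gy = sum(s[1] for s in window) / n
    gz = sum(s[2] for s in window) / n
    pitch = math.degrees(math.atan2(-gx, math.hypot(gy, gz)))
    roll = math.degrees(math.atan2(gy, gz))
    return pitch, roll
```

During walking the mean over a window of a few seconds still approximates gravity reasonably well, while per-axis variance would capture the motion that differs between positions.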

- Kaori Fujinami, Tsubasa Saeki, Yinchuan Li, Tsuyoshi Ishikawa, Takuya Jimbo, Daigo Nagase, and Koji Sato; “Fine-grained Accelerometer-based Smartphone Carrying States Recognition during Walking”, International Journal of Advanced Computer Science and Applications, Vol. 8, No. 8, pp. 447-456, September 2017. [link]

- Kaori Fujinami; “On-body Smartphone Localization with an Accelerometer”, Information, Vol. 7, No. 2, Article No. 21, MDPI, March 2016. (manuscript)

- Kaori Fujinami and Satoshi Kouchi; “Recognizing a Mobile Phone’s Storing Position as a Context of a Device and a User”, In (post) Proc. of the 9th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services (MobiQuitous2012), pp. 76-88, Beijing, China, 12 December 2012.

- Kaori Fujinami, Chunshan Jin, and Satoshi Kouchi; “Tracking On-body Location of a Mobile Phone”, Late Breaking Results – Cutting Edge Technologies on Wearable Computing, The 14th Annual IEEE International Symposium on Wearable Computers (ISWC’10), pp.190-197, October 2010.

- Kaori Fujinami and Chunshan Jin; “Sensing On-Body Location of a Mobile Phone by Motions in Storing and Carrying”, In Electronic Proc. of IEEE RO-MAN 2009 Workshop CASEbac 2009, September 2009.

- Kaori Fujinami; A Placement-aware Mobile Phone; The 1st Lancaster-Waseda Ph.D Workshop, September, 2009.

- Chunshan Jin and Kaori Fujinami; “Is My Mobile Phone in the Chest Pocket?: Knowing Phone’s Location on the Body”, Demonstrated at the 7th International Conference on Pervasive Computing, In Adj. Proc. of Pervasive2009, pp. 189-192, May 2009.

- Kaori Fujinami; Assuring Correct Installation of a Sensor Node Towards Reliable Sensing, Finnish-Japanese Workshop on Ubiquitous Computing and Urban Lives 2009, March 2009.

Investigating Psychological Effects on Positional Relationship between a Person and a Person-Following Robot (2017.4-2018.3)

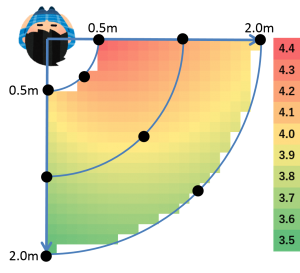

A person-following robot must understand its psychological effects on a user to maintain a good relationship with them. In this project, we identify the factors that affect the user’s psychological state through factor analysis. We also present an overall score of the following effect for various following positions based on user evaluations. The results show that a robot following closely beside the person obtains a higher score than one following the person from a distance.

- Keita Maehara and Kaori Fujinami; “Investigating Psychological Effects on Positional Relationships Between a Person and a Human-Following Robot”, In Proc. of the 24th IEEE International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA2018), pp. 242-243, poster session, 29th August 2018.

Estimating Smartphone Addiction Proneness Scale through the State of Use of Terminal and Applications (2017.4-2019.3)

Overuse of smartphone applications can lead to smartphone addiction, which affects the user’s physical and mental health. Interventions such as presenting persuasive messages and controlling the use of applications require an assessment of the user’s level of smartphone addiction. The Smartphone Addiction Proneness Scale (SAPS) has been proposed to measure this level. However, it requires the user to answer 15 questions, which makes it burdensome, unreliable (because it is based on self-report rather than actual behavior), and slow (the assessment takes time). To overcome these limitations, we propose a technique that automatically estimates the SAPS score from the actual daily use of the smartphone. Our technique estimates the SAPS score using a regression model that takes the smartphone’s states of use as explanatory variables (features). We describe the effective features and the regression model.
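
The regression step can be sketched as ordinary least squares over per-user usage features. The plain-Python sketch below fits an intercept and one weight per feature by solving the normal equations; the choice of OLS and any concrete features (for example, daily screen-on hours or unlock counts) are assumptions for illustration, while the actual model and features are those described in the paper below.

```python
def fit_linear_regression(X, y):
    """Ordinary least squares with an intercept, solved via the normal equations.

    X: list of feature vectors (one per user); y: list of SAPS scores.
    Returns [intercept, w1, w2, ...].
    """
    rows = [[1.0] + list(x) for x in X]  # prepend the intercept column
    n = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
         + [sum(r[i] * t for r, t in zip(rows, y))]
         for i in range(n)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("features are collinear; add data or drop a feature")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(n):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * p for v, p in zip(a[r], a[col])]
    return [a[i][n] / a[i][i] for i in range(n)]


def predict(coef, x):
    """Estimated SAPS score for one user's (hypothetical) usage features."""
    return coef[0] + sum(w * v for w, v in zip(coef[1:], x))
```

Each row of `X` would hold one user's usage features and `y` that user's questionnaire-based SAPS score; `predict(coef, x)` then estimates the score for a new user without the questionnaire.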

- Satoru Minagawa and Kaori Fujinami; “Estimating Smartphone Addiction Proneness Scale through the State of Use of Terminal and Applications”, In Proc. of the 1st International Workshop on Computing for Well-Being (WellComp’18), pp. 726-729, 8th October 2018.

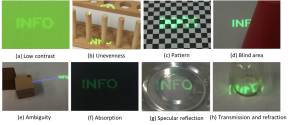

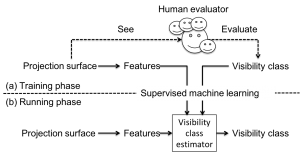

Visibility-aware label placement (VisLP) in spatial augmented reality (2015.4-2018.3)

Augmented Reality (AR) technologies enhance the physical world with digital information. In graphics-based augmentation, text and graphics, i.e., labels, are associated with an object to describe it. Various view management techniques for AR have been investigated. Most of them have been designed for two-dimensional see-through displays, and little work has addressed projector-based AR, called spatial AR. We propose VisLP, a view management method for spatial AR that finds a visible position for a label and its linkage line while taking into account the characteristics of projecting information onto the physical world. VisLP employs machine-learning techniques to classify visibility in a way that reflects both the reader’s subjective mental workload and objective measures of reading correctness.

- Keita Ichihashi and Kaori Fujinami; “Estimating Visibility of Annotations for View Management in Spatial Augmented Reality based on Machine-Learning Techniques”, Sensors 2019, 19 (4), 939. [link]

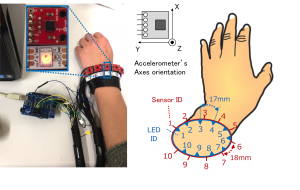

Visible Position Estimation in Whole Wrist Circumference Devices towards Pose-aware Display (2015.4-2018.3)

Smart watches allow instant access to information; however, visual notifications are not always visible, depending on the forearm posture. Flexible and curved display technologies can enable full-wrist circumference displays that show information at the most visible positions using pose awareness. A prototype device is implemented with 10 LEDs and 10 accelerometers around the wrist. The most visible LED is estimated using a machine learning technique. The main idea is to exploit the direct relationship between the raw acceleration signals and the position of the most visible LED, rather than assigning the position based on particular activity classes or estimating it with a forward-kinematic model. We also attempt to reduce the number of sensors by introducing new features.
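
A direct mapping from raw acceleration to the most visible LED can be illustrated with a k-nearest-neighbour classifier. In the sketch below, each training example concatenates the raw (x, y, z) values of all accelerometers around the wrist and is labelled with the LED judged most visible; k-NN, the helper names, and `k=3` are assumptions, not necessarily the technique used in the paper.

```python
import math
from collections import Counter


def flatten(readings):
    """Concatenate per-sensor (x, y, z) tuples into one raw feature vector."""
    return [value for xyz in readings for value in xyz]


def knn_visible_led(training, raw_vector, k=3):
    """Predict the most visible LED index by majority vote of the k nearest examples.

    training: list of (raw_vector, led_index) pairs collected with labels.
    """
    neighbors = sorted(training, key=lambda pair: math.dist(pair[0], raw_vector))[:k]
    votes = Counter(led for _, led in neighbors)
    # Counter keeps first-encountered order for equal counts, so a tie goes
    # to the LED of the nearest neighbour.
    return votes.most_common(1)[0][0]
```

Because the classifier works directly on the raw signals, no activity classes or kinematic model are involved.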

- Yuki Tanida and Kaori Fujinami; “Visible Position Estimation in Whole Wrist Circumference Device towards Forearm Pose-aware Display”, EAI Endorsed Transaction on Context-aware Systems and Applications, 18(13), e4, March 14, 2018. [link]

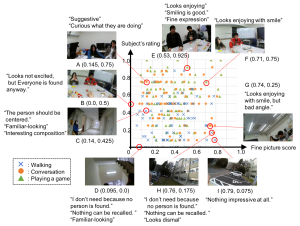

Impressive Picture Selection for Pleasurable Recall of Past Events (2015.4-2018.3)

Wearable cameras allow us to capture a large amount of video and still images automatically and implicitly. However, the captured data contain meaningless and/or redundant content, so only the necessary images should be extracted. We propose a method that uses audio and video data to select a set of still images intended to give users a pleasurable experience when they view the images later.

- Eriko Kinoshita and Kaori Fujinami; “Impressive Picture Selection from Wearable Camera Toward Pleasurable Recall of Group Activities”, Universal Access in Human–Computer Interaction. Human and Technological Environments. UAHCI 2017. Lecture Notes in Computer Science, vol 10279. pp. 446-456, Springer, Cham., July 13 2017. (International Conference on Universal Access in Human-Computer Interaction)

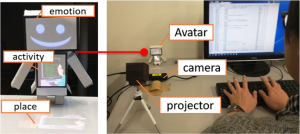

Palco: Printable Avatar System for Loose Communication between Distant People (2016.4-2019.3)

We propose a method for providing users with a sense of security and connectedness with others by facilitating loose communication. Loose communication is defined by the presentation of abstract information and passive (one-way) communication. By focusing on the physicality and anthropomorphic characteristics of tangible avatars, we investigated a communication support system, Palco, that displays three types of contextual information with respect to the communication partner—emotional state, activity, and location—in a loose manner. Our approach contrasts with typical SNS interaction methods characterized by tight communication with interactivity and concrete information.

- Shin’ichi Endo and Kaori Fujinami, “Impacts of Handcrafting Tangible Communication Avatars on the Communication Partners and Creative Experiences”, EAI Endorsed Transactions on Creative Technologies, Vol. 9, No. 4, July 2023.

- Shin’ichi Endo and Kaori Fujinami; “Realizing Loose Communication with Tangible Avatar to Facilitate Recipient’s Imagination”, Information, Vol. 9, No. 2, Article 32, February 1, 2018. [link][YouTube (in Japanese)]

- (Excellent Demo! Award 3rd prize) Shinichi Endo and Kaori Fujinami; “Palco: Printable Avatar System that Realizes Loose Communication between People”, In Proc. of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE2017), pp. 671-672, October 2017. (demo! session)

UnicrePaint: Digital Painting through Physical Objects (2014.4-2017.3, 2018.4-2019.9)

Mankind’s capacity for creativity is infinite. In the physical world, people create visual artworks not only with specific tools, such as paintbrushes, but also with various objects, such as dried flowers pressed on paper. Digital painting, in contrast, has a number of advantages; however, it currently requires a specific tool, such as a stylus, which might diminish the pleasurable experience of creation. In this project, we investigate a digital painting system called UnicrePaint that uses everyday objects as tools of expression. UnicrePaint is designed to capture the appearance of contact points on a drawing surface and provide real-time, co-located digitized feedback. Contact detection is realized by FTIR (frustrated total internal reflection)-based sensing.
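
In FTIR sensing, regions where an object touches the surface typically show up as bright spots to an infrared camera. The sketch below finds such spots in a grayscale frame by thresholding and 4-connected flood fill; the threshold, the minimum area, and the frame representation are assumptions, and capturing the appearance of each contact, which UnicrePaint also does, is not covered.

```python
from collections import deque


def detect_contacts(frame, threshold=200, min_area=3):
    """Find bright contact regions in an IR camera frame.

    frame: 2-D list of pixel intensities (0-255).
    Returns a list of (centroid_row, centroid_col, area), one per region.
    """
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    contacts = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Breadth-first flood fill over 4-connected bright pixels.
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                pr, pc = queue.popleft()
                pixels.append((pr, pc))
                for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and frame[nr][nc] >= threshold):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(pixels) >= min_area:  # ignore isolated noise pixels
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                contacts.append((cy, cx, len(pixels)))
    return contacts
```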

- Kaori Fujinami, Mami Kosaka, and Bipin Indurkhya; “Painting an Apple with an Apple: A Tangible Tabletop Interface for Painting with Physical Objects”, Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), Vol. 2, Issue 4, Article No. 162, December 2018. (Invited for presentation in UbiComp’19) [ACM-DL][YouTube]

- Mami Kosaka and Kaori Fujinami; “UnicrePaint: Digital Painting through Physical Objects for Unique Creative Experiences”, In Proceedings of the 10th Anniversary Conference on Tangible Embedded And Embodied Interaction (TEI), pp. 475-481, February 2016 (Work-In-Progress session). (YouTube)

Spot and Set: Controlling Lighting Patterns of LEDs through a Smartphone (2014.4-2017.3)

People enjoy various social events, and illumination plays an important role in creating a good atmosphere during these events. People usually illuminate trees and walls by stringing cables of LEDs. However, they have no control over the lighting patterns; they can only select a preset pattern. In this project, we propose a system to control the lighting patterns of LEDs, in which a user’s smartphone works as the controller. The user holds the smartphone, with its back-side light on, over a light sensor that corresponds to a particular LED. Once one or more LEDs are selected, the user sets the desired lighting pattern. We expect this approach to extend users’ range of expression at a low learning cost.
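
The selection step can be sketched as comparing each light sensor against its own ambient baseline: a sensor counts as selected when the phone’s back-side light raises its reading well above that baseline. The margin, the baseline update rule (an exponential moving average for unselected sensors), and the function names are hypothetical.

```python
def selected_leds(readings, baselines, margin=300):
    """Indices of LEDs whose light sensor is lit by the phone.

    readings/baselines: current and ambient light levels per sensor
    (hypothetical units). A sensor is selected when its reading exceeds
    its own baseline by more than `margin`.
    """
    return [i for i, (now, base) in enumerate(zip(readings, baselines))
            if now - base > margin]


def update_baselines(readings, baselines, selected, alpha=0.05):
    """Track slow ambient changes with an exponential moving average.

    Baselines of currently selected sensors are frozen so that the phone's
    light is not absorbed into the ambient level.
    """
    chosen = set(selected)
    return [base if i in chosen else base + alpha * (now - base)
            for i, (now, base) in enumerate(zip(readings, baselines))]
```

Per-sensor baselines keep the comparison fair when some LEDs sit in brighter spots than others.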

- (Excellent Demo! Award, 2nd Prize) Daigo Nagase and Kaori Fujinami; “Spot and Set: Controlling Lighting Patterns of LEDs Through a Smartphone”, In Proc. of GCCE2015, October 2015.

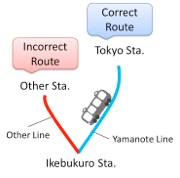

Mis-choice Detection of Public Transportation Route based on Smartphone and GIS (2014.4-2017.3)

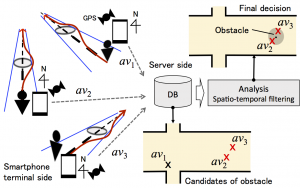

Public transportation systems in large cities are very complex, and people unfamiliar with a city often take the wrong route. Although a number of route-finding and transit support services are already available to prevent route mischoices, people still make mistakes and become anxious about how to recover. In this project, we propose a system that detects a mischoice of a public transportation route before the user arrives at the next station. The system runs on a smartphone in cooperation with a Geographical Information System (GIS) on the network. Unlike other methods that aim at self-contained train localization based on a timetable, our method is intended to handle delayed and congested train services by an approach similar to map-matching. The system is expected to help people recover from a failure as early as possible.
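
The map-matching idea can be illustrated geometrically: compare recent position fixes with the polylines of candidate routes and flag a mischoice when another route fits the trace better than the planned one. This sketch assumes positions already projected to planar coordinates in metres, ignores timetables and the GIS service, and uses hypothetical names and a hypothetical 50 m tolerance.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from point p to segment ab in planar (x, y) coordinates."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return math.dist(p, a)
    # Project p onto the segment, clamping to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.dist(p, (ax + t * dx, ay + t * dy))


def distance_to_route(p, polyline):
    """Shortest distance from p to any segment of a route polyline."""
    return min(point_segment_distance(p, a, b) for a, b in zip(polyline, polyline[1:]))


def detect_mischoice(trace, routes, planned, tolerance=50.0):
    """Return the better-matching route name if it is not the planned one, else None.

    trace: recent positions; routes: {name: polyline}. Averaging over several
    fixes reduces the influence of individual positioning errors.
    """
    def score(name):
        return sum(distance_to_route(p, routes[name]) for p in trace) / len(trace)

    best = min(routes, key=score)
    if best != planned and score(planned) > tolerance:
        return best
    return None
```

Because only geometry is matched, a delayed or crowded train does not affect the decision, which is the motivation the project gives for a map-matching-like approach.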

- Takuya Jimbo and Kaori Fujinami; “Detecting Mischoice of Public Transportation Route based on Smartphone and GIS”, In Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp’15), pp. 165-168, September 2015. (poster)

Pedestrian’s Avoidance Behavior Recognition for Opportunistic Road Anomaly Detection (2014.4-2017.3)

Automatic road anomaly detection techniques for cars and bikes have already been proposed, but they deal with relatively large movements. By contrast, a pedestrian’s avoidance behavior is too subtle for the existing methods to be applied. In this project, we investigate an opportunistic sensing-based system for road anomaly detection. To detect road anomalies such as cracks, pits, and puddles, we focus on pedestrians’ avoidance behavior, which is characterized by patterns of azimuth change. Three typical avoidance behaviors are defined. Recognizing avoidance behavior and aggregating the events with their locations allows anomalies to be reported automatically.
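
Avoidance can be framed as a short excursion of the walking azimuth away from the course, followed by a return. The sketch below detects such excursions; the wrap-around handling is standard, but the fixed reference heading, the 20 degree threshold, the `max_len` limit, and the reset on long deviations are assumptions, and it does not distinguish the three avoidance behaviors defined in this project.

```python
def angle_diff(a, b):
    """Signed difference a - b in degrees, wrapped to [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def find_detours(azimuths, threshold=20.0, max_len=10):
    """Return (start, end) index pairs where the heading leaves the course and returns.

    A detour is a run of samples deviating from the reference heading by more
    than `threshold` degrees that returns within `max_len` samples. A longer
    deviation is treated as a real turn and becomes the new reference.
    """
    ref = azimuths[0]
    events, start = [], None
    for i, az in enumerate(azimuths):
        off = abs(angle_diff(az, ref)) > threshold
        if off:
            if start is None:
                start = i
            elif i - start >= max_len:
                ref, start = az, None  # deviation lasted too long: a genuine turn
        elif start is not None:
            events.append((start, i))
            start = None
    return events
```

Aggregating the positions at which such events occur, across many pedestrians, is what would point to a likely anomaly.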

- Tsuyoshi Ishikawa and Kaori Fujinami; “Smartphone-based Pedestrian’s Avoidance Behavior Recognition towards Opportunistic Road Anomaly Detection”, ISPRS International Journal of Geo-Information, Vol. 5, No. 10, Article 182, October 2016.

- Tsuyoshi Ishikawa and Kaori Fujinami; “Pedestrian’s Avoidance Behavior Recognition for Road Anomaly Detection in the City”, In Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp’15), pp. 201-204, September 2015. (poster)

Understanding where to project information on the desk to support work with paper and pen (2014.4-2015.3)

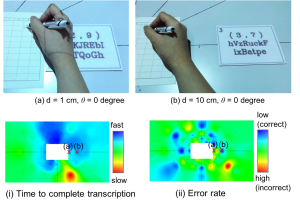

Paper-based work remains part of daily life, and digital information may support it. In this project, we assume an environment in which a projector presents information on a desk used for a transcription task, such as filling in a change-of-address notification form for the post office. We explore the effect of the distance and direction between the transcription target (printed) and the reference information (projected) on efficiency and effectiveness. We confirmed that presenting the information on the upper side of the transcription target and close to it gave better results (faster task completion and lower error rates), while presenting it at the lower right, around the dominant hand, gave worse results.

- Mai Tokiwa and Kaori Fujinami; “Understanding Where to Project Information on the Desk for Supporting Work with Paper and Pen”, Virtual, Augmented and Mixed Reality. VAMR 2017. Lecture Notes in Computer Science, vol 10280., pp. 72-81, Springer, Cham., July 13 2017. (International Conference on Virtual, Augmented and Mixed Reality (VAMR2017))

Avoiding texting while walking during crossing roads and railways (2013.4-2016.3)

In recent years, many people walk carelessly while looking at their smartphone screens, which can cause fatal accidents. In this project, we investigate a system that restricts smartphone usage while a pedestrian is crossing a road or railway, based on a combination of the pedestrian’s present and past locations, walking direction, and walking speed, measured with smartphone-mounted sensors. A geographical database containing road and railway information is also used.
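
The crossing check can be sketched with two standard geodesy formulas, haversine distance and initial bearing: usage is restricted when the pedestrian is moving, is near a crossing from the geographic database, and is heading toward it. The crossing-list format, the 30 m radius, the 45 degree tolerance, and the speed threshold are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing from point 1 to point 2, clockwise from north, in [0, 360)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    x = math.sin(dl) * math.cos(p2)
    y = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return math.degrees(math.atan2(x, y)) % 360.0


def should_restrict(prev, curr, dt_s, crossings, radius_m=30.0,
                    heading_tol_deg=45.0, min_speed_mps=0.5):
    """prev/curr: (lat, lon) fixes dt_s seconds apart; crossings: list of (lat, lon)."""
    speed = haversine_m(*prev, *curr) / dt_s
    if speed < min_speed_mps:
        return False  # standing still: not about to cross
    heading = bearing_deg(*prev, *curr)
    for crossing in crossings:
        if haversine_m(*curr, *crossing) > radius_m:
            continue
        to_crossing = bearing_deg(*curr, *crossing)
        diff = abs((to_crossing - heading + 180.0) % 360.0 - 180.0)
        if diff <= heading_tol_deg:
            return True  # walking toward a nearby crossing
    return False
```

Using the past fix as well as the present one is what yields both speed and walking direction, mirroring the combination of present and past locations described above.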

- Tsubasa Saeki and Kaori Fujinami; “Smartphone Usage Restriction During Crossing Roads and Railways”, In Proceedings of the 1st International Workshop on Wearable Systems and Applications (WearSys’15), pp. 51-52, Florence, Italy, August 18 2015.

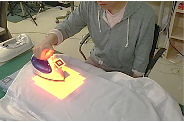

An Ironing Support System with Superimposed Guidance (2012.4-2015.3)

Ironing is a troublesome household chore that many people dislike. A questionnaire survey of housewives revealed that beginners’ lack of skills and knowledge was the reason for this dislike. To lower this barrier, we investigated a system that supports beginners in ironing shirts. The system recognizes the position of a shirt on the ironing board and the user’s operational states. The positions of wrinkles are recognized through texture analysis of infrared camera images. Supportive information is then projected by a video projector onto an appropriate position on the working surface.

- Kimie Suzuki and Kaori Fujinami; “A Projector-Camera System for Ironing Support with Wrinkle Enhancement”, EAI Endorsed Transaction on Ambient Systems, 17(14), e2, August 2017. [link]

- Kimie Suzuki and Kaori Fujinami; “An Ironing Support System with Superimposed Information for Beginners”, In Proceedings of the 2nd IEEE International Workshop on Consumer Devices and Systems (CDS2014), Sweden, July 2014.

View Management Techniques for Tabletop Spatial Augmented Reality Systems (2012.4-2015.3)

Projector-based augmented reality (AR) makes it easy to associate information with a particular object by using a label. When a projector is installed above a workspace and pointed downward, supportive information can be presented on the workspace; however, a label projected onto a non-planar object is deformed. In addition, a label might be projected into a region where it is hidden by the object. In this project, we investigate a view management technique that facilitates interpretation by improving the legibility of information. Our proposed method, the Nonoverlapped Gradient Descent (NGD) method, determines the position of a newly added label so that it does not overlap surrounding labels and linkage lines. The problem of projecting into shadow areas and blind areas is also addressed by estimating these areas, approximating each object as a simple solid.
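
A penalty-based gradient descent conveys the flavour of this placement problem: the new label is pulled toward its anchor while overlap with existing labels is penalized. This is a generic sketch, not the NGD method itself; it ignores linkage lines, shadow areas, and blind areas, and the cost weights, step size, and names are assumptions.

```python
def overlap_area(c1, s1, c2, s2):
    """Overlap area of two axis-aligned rectangles given centres and (w, h) sizes."""
    dx = min(c1[0] + s1[0] / 2, c2[0] + s2[0] / 2) - max(c1[0] - s1[0] / 2, c2[0] - s2[0] / 2)
    dy = min(c1[1] + s1[1] / 2, c2[1] + s2[1] / 2) - max(c1[1] - s1[1] / 2, c2[1] - s2[1] / 2)
    return max(0.0, dx) * max(0.0, dy)


def cost(pos, size, anchor, others, w_overlap=50.0):
    """Squared distance to the anchor plus a squared-overlap penalty.

    others: list of (centre, size) pairs for labels already placed.
    """
    d2 = (pos[0] - anchor[0]) ** 2 + (pos[1] - anchor[1]) ** 2
    penalty = sum(overlap_area(pos, size, c, s) ** 2 for c, s in others)
    return d2 + w_overlap * penalty


def place_label(start, size, anchor, others, lr=0.002, steps=2000, eps=1e-4):
    """Move a new label by gradient descent on `cost` using central differences."""
    x, y = start
    for _ in range(steps):
        gx = (cost((x + eps, y), size, anchor, others)
              - cost((x - eps, y), size, anchor, others)) / (2 * eps)
        gy = (cost((x, y + eps), size, anchor, others)
              - cost((x, y - eps), size, anchor, others)) / (2 * eps)
        x, y = x - lr * gx, y - lr * gy
    return x, y
```

Starting exactly at the anchor on top of an identical label is a stationary point of this cost, so the sketch expects a slightly offset `start`; for a 2 x 1 label started at (0.3, 0.2) over an identical label at the origin, the new label slides upward until it almost clears the existing one. Because the penalty is smooth rather than a hard constraint, a small residual overlap remains, which a method aiming at strictly nonoverlapped placement would have to eliminate.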

- Makoto Sato and Kaori Fujinami; “Nonoverlapped View Management for Augmented Reality by Tabletop Projection”, Journal of Visual Languages and Computing (the official DMS2014 proceedings, an extended version), Vol. 25, Issue 6, pp. 891-902, Elsevier, 2014.12. DOI: 10.1016/j.jvlc.2014.10.030

- Makoto Sato and Kaori Fujinami; “Nonoverlapped View Management for Augmented Reality by Tabletop Projection”, In Proceedings of the 20th International Conference on Distributed Multimedia Systems (DMS), pp. 250-259, Pittsburgh, USA August 27 2014.

Augmented Card Playing (2011.4-2014.3)

This project aims at investigating a software toolkit for augmenting traditional card games with a projector and a camera to add playfulness and encourage communication. The functionalities, such as card recognition, player identification, and visual effects, were specified based on a user survey conducted during actual play. Cards are recognized by a video camera without any artificial markers, with an accuracy of 96%. Players are identified by the same camera from the direction in which their hands appear over the table. As a case study, the Pelmanism game was implemented on top of the system to validate the concept of augmentation and the recognition performance.

- Nozomu Tanaka and Kaori Fujinami; “A Projector-Camera System for Augmented Card Playing and a Case Study with the Pelmanism Game”, EAI Endorsed Transaction on Ambient Systems, 17(13): e6, 17 May 2017. [link]

- Nozomu Tanaka, Satoshi Murata and Kaori Fujinami; “A System Architecture for Augmenting Card Playing with a Projector and a Camera”, In Proceedings of the 2012 International Conference on Digital Contents and Applications (DCA 2012), pp. 193-201, South Korea, 17 December 2012.

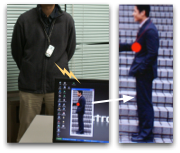

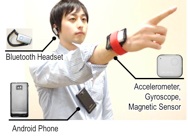

Pointing and Calling Recognition for Remote Operatives Management (2011.4-2014.3)

This project aims at investigating a new technique that facilitates assessing whether pointing and calling is properly enforced. Pointing and calling is a practice in which workers point at an object and call out its status to maintain occupational safety and correctness. Our proposed system enables early detection of improperly performed pointing and calling by recognizing the activity. We implemented a prototype system on an Android smartphone that records each occurrence of the activity, where it was performed, and when. The recognition accuracy demonstrated the feasibility of recognition with a self-contained wearable computer system.

- Masahiro Iwasaki and Kaori Fujinami; “Pointing Gesture Recognition using Compressed Sensing for Training Data Reduction”, In the 1st International Workshop on Human Activity Sensing Corpus and its Applications (HASCA2013) (a Workshop of Ubicomp’13), Adj. Proc. of UbiComp’13, pp. 645-652, Zurich, Switzerland, September 8, 2013.

- Masahiro Iwasaki and Kaori Fujinami; “Recognition of Pointing and Calling for Remote Operatives Management”, In Adj. Proceedings of the 10th Asia-Pacific Conference on Human-Computer Interaction (APCHI2012), pp. 751-752, Matsue, August 29 2012 (poster).

- (Best Paper Award and IEEE Thailand Senior Project Contest 1st Prize) Masahiro Iwasaki and Kaori Fujinami; “Recognition of Pointing and Calling for Industrial Safety Management”, In Proceedings of the 2012 First ICT International Senior Project Conference and IEEE Thailand Senior Project Contest (ICT-ISPC2012), pp.50-53, Thailand, April 2012.

INCA: Interactive Context-aware System for Energy Efficient Living (2011.1-2012.12)

This work is conducted in cooperation with the University of Oulu, with the support of the Japan Society for the Promotion of Science (JSPS) and the Academy of Finland under the Japan-Finland Bilateral Core Program. The aim of this joint project is to develop an interactive, context-aware, sensor-based feedback and control system to support energy-efficient housing. The system is intended to motivate inhabitants to become aware of their energy consumption habits and thereby reduce energy costs. A network of sensors will be installed in the environment to perceive human context and the energy consumption of devices. Statistical machine learning algorithms will then be developed to recognize low-level human-related contexts of the inhabitants from the sensor data. Different human-computer interaction (HCI) techniques will be studied to build a smooth feedback and control system with a persuasive interface. For more details on the project, click [here].

- Shota Yamashita and Kaori Fujinami; “Water Waste Detection and Activity Recognition at the Sink Towards Promoting Water Conservation”, In Proceedings of the 2020 IEEE 9th Global Conference on Consumer Electronics (GCCE 2020), October 2020. (poster)

- Kaori Fujinami, Shota Kagatsume, Satoshi Murata, Tuomo Alasalmi, Jaakko Suutala, and Juha Röning, “An Augmented Refrigerator with the Awareness of Wasteful Electricity Usage”, International Journal of Internet, Broadcasting and Communication, Vol. 6, No. 1, pp. 1-4, February 2014. [link]

- Satoshi Murata, Shota Kagatsume, Hiroaki Taguchi, and Kaori Fujinami; “PerFridge: An Augmented Refrigerator that Detects and Presents Wasteful Usage for Eco-Persuasion”, In Proc. of the 10th IEEE/IFIP International Conference on Embedded and Ubiquitous Computing (EUC’12), Pafos, Cyprus, 6 December 2012.

- Hironori Nakajo, Keisuke Koike and Kaori Fujinami; “FPGA Acceleration with a Reconfigurable Android for the Future Embedded Devices,” In Proceedings of International Workshop on Performance, Applications, and Parallelism for Android and HTML5 (PAPAH), Italy, 2012.

- Hironori Nakajo, Keisuke Koike, Atsushi Ohta, Kohta Ohshima, and Kaori Fujinami; “Reconfigurable Android with an FPGA Accelerator for the Future Embedded Devices”, To appear in Proc. of the 3rd Workshop on Ultra Performance and Dependable Acceleration Systems (UPDAS), December 2011.

- Trang Thuy Vu, Akifumi Sokan, Hironori Nakajo, Kaori Fujinami, Jaakko Suutala, Pekka Siirtola, Tuomo Alasalmi, Ari Pitkänen and Juha Röning; “Detecting Water Waste Activities for Water-Efficient Living”, In Proceedings of the 13th International Conference on Ubiquitous Computing (UbiComp2011) (poster), pp. 579-580, September 2011.

- Trang Thuy Vu, Akifumi Sokan, Hironori Nakajo, Kaori Fujinami, Jaakko Suutala, Pekka Siirtola, Tuomo Alasalmi, Ari Pitkänen and Juha Röning; “Feature Selection and Activity Recognition to Detect Water Waste from Water Tap Usage”, presented at the 1st International Workshop on Cyber-Physical Systems, Networks, and Applications (CPSNA’11), In Proceedings of the 17th IEEE International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA2011), Vol. II, pp. 138-141, August 2011.

- Hironori Nakajo, Kaori Fujinami, Kinya Fujita, Keiichi Kaneko, Konosuke Kawashima, Toshiyuki Kondo, Yoshiyuki Kotani, Yasue Mitsukura, Masaki Nakagawa, Takafumi Saito, Ikuko Shimizu and Matsuaki Terada; “Overview of the Symbio-Information Processing Project of TUAT”, In Proceedings of the 2011 International Joint Conference on Computer Science and Software Engineering (JCSSE), Ubiquitous Computing for Symbio-Information Processing (UCSIP) Workshop, pp. 414-419, May 1, 2011.

- Hironori Nakajo and Kaori Fujinami; “An FPGA-based Accelerator of a Dalvik Virtual Machine for an Android Mobile and Embedded Processor”, In Proceedings of International Workshop on Innovative Architecture for Future

On-body Placement-aware Heatstroke Alert (2011.4-2015.3)

In Japan, various consumer products that alert users to heatstroke risk are available on the market, using the Wet-Bulb Globe Temperature (WBGT) as the heat stress index. An issue with such portable environmental measurement devices is that the measurement may be incorrect if the instrument is not exposed to the outside air. This can happen, for example, when it is carried in a pocket, where body heat and sweat affect the reading. Overestimation caused by incorrect measurements may lead users to distrust the device because of frequent warnings, whereas underestimation could cause serious harm to the user. To address this issue, we propose a trustworthy and effective heatstroke risk alert device. It presents a calibrated risk level together with the possibility of over- or underestimation, based on where the device is stored on the body. For this purpose, we also developed an external temperature and humidity sensing module that can be attached to an Android 3.0 (or higher) terminal via USB.
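The alert idea above can be sketched as two steps: compute a WBGT value, then attach a placement-dependent caveat to the resulting risk level. The WBGT weightings below are the standard ISO 7243 formulas. The four risk levels follow the commonly used Japanese daily-life guideline (below 25 °C caution, 25 to 28 warning, 28 to 31 severe warning, 31 and above danger), which is an assumption about which guideline applies. The placement names and their bias directions in `PLACEMENT_BIAS` are purely hypothetical. Real values would have to be measured per placement, and this is not the calibration method used in the project.

```python
def wbgt_outdoor(t_nwb, t_globe, t_dry):
    """Standard outdoor WBGT (ISO 7243), all temperatures in degrees Celsius."""
    return 0.7 * t_nwb + 0.2 * t_globe + 0.1 * t_dry

def wbgt_indoor(t_nwb, t_globe):
    """Standard indoor / no-solar-load WBGT (ISO 7243)."""
    return 0.7 * t_nwb + 0.3 * t_globe

def risk_level(wbgt):
    """Assumed four-level daily-life guideline thresholds."""
    if wbgt >= 31:
        return "danger"
    if wbgt >= 28:
        return "severe warning"
    if wbgt >= 25:
        return "warning"
    return "caution"

# Hypothetical placement -> likely measurement bias (None = exposed to ambient air).
PLACEMENT_BIAS = {
    "hand": None,
    "neck strap": "possible overestimate",
    "chest pocket": "possible overestimate",
    "trouser pocket": "possible overestimate",
    "bag": "possible underestimate",
}

def alert(wbgt, placement):
    """Risk level plus a caveat about the trustworthiness of the reading."""
    level = risk_level(wbgt)
    bias = PLACEMENT_BIAS.get(placement, "unknown placement")
    return level if bias is None else f"{level} ({bias})"
```

For example, a reading taken in a trouser pocket would still be shown with its risk level, but flagged so the user can judge whether to trust a warning.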

- Kaori Fujinami; “Smartphone-based environmental sensing using device location as meta data”, International Journal on Smart Sensing and Intelligent Systems, Vol. 9, No. 4, pp. 2257-2275, December 2016.

- Kaori Fujinami, Yuan Xue, Satoshi Murata and Shigeki Hosokawa; “A Human-Probe System that Considers On-body Position of a Mobile Phone with Sensors”, In Proc. of the 1st International Conference on Distributed, Ambient and Pervasive Interactions (DAPI2013), pp. 99-108, Las Vegas, USA, 24 July, 2013.

- Kaori Fujinami, Satoshi Kouchi, and Yuan Xue; “Design and Implementation of an On-body Placement-aware Smartphone”, In Proceedings of the 32nd International Conference on Distributed Computing Systems Workshops (presented in International Workshop on Sensing, Networking, and Computing with Smart Phones (PhoneCom 2012)), pp. 69-74, Macau, June 18, 2012.

- Yuan Xue, Shigeki Hosokawa, Satoshi Murata, Satoshi Kouchi and Kaori Fujinami; “An On-body Placement-aware Heatstroke Alert on a Smartphone”, In Proceedings of the 2012 International Conference on Digital Contents and Applications (DCA 2012), pp. 226-234, South Korea, 16 December 2012.

- Yuan Xue, Shigeki Hosokawa, Satoshi Murata, Satoshi Kouchi, and Kaori Fujinami; “A Trustworthy Heatstroke Risk Alert on a Smartphone”, In Adj. Proceedings of the 10th Asia-Pacific Conference on Human-Computer Interaction (APCHI2012), pp. 621-622, Matsue, August 29, 2012 (demo).

Facilitating Bothersome Task by Affection for a Pet (2010.5-2012.3)

We propose a persuasive interface that leverages a person's affection for a pet to get them to start a bothersome task, such as labeling a dataset for supervised learning or tagging pictures taken with a digital camera. A virtual pet “lives” in the wallpaper of a smartphone, changes its facial and body expressions, and talks to the person based on the user's context, which is designed to make the person feel affection for it. When the person finishes using an application, the virtual pet asks him or her to conduct a task, e.g., labeling. If he or she accepts the request, the pet expresses delight; if he or she refuses, it shows a sad facial expression.
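The interaction flow above can be expressed as a small event-driven state machine. In this sketch the pet asks for a task when an app closes and reacts to the user's answer. The class, method names, and expression labels are hypothetical and only mirror the described behavior; they are not the actual implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class VirtualPet:
    expression: str = "neutral"          # expression currently shown on the wallpaper
    pending_task: Optional[str] = None   # task the pet is currently asking for
    completed: List[str] = field(default_factory=list)

    def on_app_finished(self, app_name: str) -> str:
        """Called when the user finishes using an app: the pet requests a labeling task."""
        self.pending_task = f"label the data recorded while you used {app_name}"
        self.expression = "asking"
        return f"Could you {self.pending_task}?"

    def on_reply(self, accepted: bool) -> None:
        """The pet reacts emotionally to the user's answer; ignored if nothing was asked."""
        if self.pending_task is None:
            return
        if accepted:
            self.completed.append(self.pending_task)
            self.expression = "delighted"
        else:
            self.expression = "sad"
        self.pending_task = None
```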

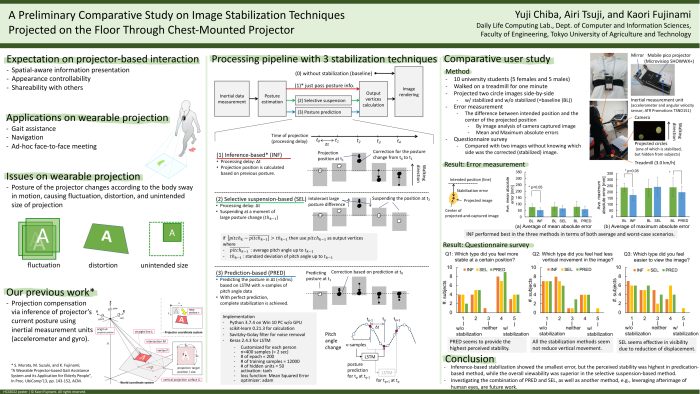

On-floor Image stabilization of wearable projection display (2010.7-2013.3)

Mobile projectors have come under the spotlight as personal devices for information presentation owing to improved performance and reduced size and weight. The basic characteristics of projector-based interaction are spatial awareness, appearance controllability, and sharability, and a mobile projector enhances their value with its “everywhere” nature. We consider that presenting information at an intended position with an intended size, i.e., spatial awareness, is a distinctive advantage over other modalities such as smartphones/tablets and head-mounted displays (HMDs). However, applications used in motion, e.g., while walking, require stabilization of the projected image. The projector shakes with the body's sway while the user is moving, so the image on the projection surface (e.g., the floor) swings. Such instability not only affects visibility but also makes it difficult to present spatial information. We investigated an image stabilization method that uses the projector's posture so that corrected images are presented at the intended position and size without distortion.
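One way to use the projector's posture for stabilization is a plane homography. For a pinhole projector with intrinsics `K`, world-to-projector rotation `R` (e.g., from an inertial sensor), and position `C`, the floor plane z = 0 maps to projector pixels by H = K [r1 r2 -RC]. Recomputing H every frame and drawing the content at the projected corners of a fixed floor rectangle keeps the image in place as the body sways. This is a sketch under assumed conventions: an ideal pinhole model, z up in the world frame, and a small rotation about the projector's x axis standing in for sway. It is not necessarily the method used in the publications.

```python
import numpy as np

def rot_x(theta):
    """Rotation about the projector's x axis (used here to model body sway)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

# Assumed convention: projector pointing straight down, with projector x = world x,
# projector y = -world y, and optical axis z = -world z.
R_DOWN = np.diag([1.0, -1.0, -1.0])

def floor_to_projector(K, R, C):
    """Homography from floor coordinates (x, y) on the plane z = 0 to projector pixels."""
    return K @ np.column_stack([R[:, 0], R[:, 1], -R @ C])

def stabilized_corners(K, R, C, target):
    """Projector pixels where the corners of a floor-fixed target must be drawn."""
    H = floor_to_projector(K, R, C)
    pts = np.column_stack([target, np.ones(len(target))]) @ H.T
    return pts[:, :2] / pts[:, 2:3]   # dehomogenize (assumes points lie in front of the lens)
```

Given the four corners, the content image could then be pre-warped into that quadrilateral, for example with OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective`.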

- Yuji Chiba, Airi Tsuji, and Kaori Fujinami, “A Preliminary Comparative Study on Image Stabilization Techniques Projected on the Floor through Chest-mounted Projector”, In Proc. of HCII2022, June 26-July 1 2022. (online)

- Satoshi Murata and Kaori Fujinami; “A Stabilization Method of Projected Images for Wearable Projector Applications”, In Proceedings of the 13th International Conference on Ubiquitous Computing (UbiComp2011) (demo), pp. 469-470, September 2011.

- Satoshi Murata and Kaori Fujinami; “Stabilization of Projected Image for Wearable Walking Support System Using Pico-Projector”, presented at the 1st International Workshop on Cyber-Physical Systems, Networks, and Applications (CPSNA’11), In Proceedings of the 17th IEEE International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA2011), Vol. II, pp. 113-116, August 2011.

We also investigated a gait assistance application of the image stabilization method for elderly people who need preventive care and for patients with gait disorders. The system uses a hip-mounted projector to present visual information for gait support, which is determined based on the user's walking condition and physical function.

- Satoshi Murata, Masanori Suzuki, and Kaori Fujinami; “A Wearable Projector-based Gait Assistance System and its Application for Elderly People”, In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp’13), pp. 143-152, Zurich, Switzerland, September 10, 2013.

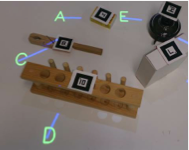

ost4ce: On-site Safety Training for Chemistry Experiments (2009.4-2012.3, 2012.4-2015.3, 2015.4-2018.3)

In Japan, safety training for chemistry courses is typically given at the beginning of a semester as a classroom lecture, using materials such as videos and textbooks. This training style may create a gap between the safety procedures being learned and actual practice. That gap may weaken the effect of the training and, in turn, lead to a failure to prevent accidents. We propose a tangible learning system that displays messages about possible accidents. One of the most important design issues is keeping students independent of the system. If a safety-training system gave explicit guidance all of the time, it would certainly help a student during an experiment, but it would deprive him or her of the opportunity to learn how to avoid accidents. Keeping a balance between learning safety measures and being safe is therefore an important issue.

- Kaori Ito, Hiroaki Taguchi and Kaori Fujinami; “Posing Questions during Experimental Operations for Safety Training in University Chemistry Experiments”, International Journal of Multimedia and Ubiquitous Engineering (IJMUE), Vol. 9, No. 3, pp. 51-62, March 2014.

- Kaori Ito, Hiroaki Taguchi, and Kaori Fujinami; “A Preliminary Study on Posing Questions during Operations for Safety Training in Chemistry Experiments”, In Proc. of International Workshop on Multimedia 2013, December 13, 2013.

- Kaori Fujinami, Nobuhiro Inagawa, Kosuke Nishio and Akifumi Sokan; “A Middleware for a Tabletop Procedure-aware Information Display”, Multimedia Tools and Applications, Vol. 57, Issue 2, Springer, 2012.

- Kaori Fujinami and Akifumi Sokan; “Nondirective Information Presentation for On-site Safety Training in Chemistry Experiments”, In Proceedings of ACM International Working Conference on Advanced Visual Interfaces (AVI 2012), pp. 308-311, Italy, May 2012.

- Akifumi Sokan, Ming Wei Hou, Norihide Shinagawa, Hironori Egi and Kaori Fujinami; “A Tangible Experiment Support System with Presentation Ambiguity for Safe and Independent Chemistry Experiments”, Journal of Ambient Intelligence and Humanized Computing, Springer, November 2011. (Online First)

- Kaori Fujinami; “A Case Study on Information Presentation to Increase Awareness of Walking Exercise in Everyday Life”, International Journal of Smart Home, Vol. 4, No. 2, pp. 11-26, Science & Engineering Research Support Center (SERSC), 2011.

- Akifumi Sokan, Hironori Egi and Kaori Fujinami; “Spatial Connectedness of Information Presentation for Safety Training in Chemistry Experiments”, In Proc. of ACM International Conference on Interactive Tabletops and Surfaces 2011 (ITS2011) (poster), pp. 252-253, November 2011.

- Kaori Fujinami; “Effects of Multiple Interpretations towards Nondirective Support Systems”, Symposium on Interaction with Smart Artifacts, Bilateral DFG-Symposium between Japan and Germany, March 7-9, 2011, Tokyo, Japan.

- Kaori Fujinami, Nobuhiro Inagawa, Kosuke Nishio and Akifumi Sokan; “A Middleware for a Tabletop Procedure-aware Information Display”, In Proceedings of the 2010 International Workshop on Advanced Future Multimedia Services (AFMS2010), 9-11 December, 2010.

- Akifumi Sokan, Nobuhiro Inagawa, Kosuke Nishijo, Norihide Shinagawa, Hironori Egi and Kaori Fujinami; “Alerting Accidents with Ambiguity: A Tangible Tabletop Application for Safe and Independent Chemistry Experiments”, In Proceedings of the 7th International Conference on Ubiquitous Intelligence and Computing (UIC2010), LNCS 6406, pp. 151-166, October 2010.

- Akifumi Sokan and Kaori Fujinami; “A-CUBE: A Tangible Tabletop Application to Support Safe and Independent Chemistry Experiments”, video demonstrated at the 7th International Conference on Ubiquitous Intelligence and Computing (UIC2010), pp. 490-493, October 2010.

- Kaori Fujinami; “Weaving Information into Daily Activities to Maximize the Effects”, Visiting talk at School of Informatics, University of Edinburgh, September 7, 2010.

- Kaori Fujinami, Akifumi Sokan, Nobuhiro Inagawa, Kosuke Nishijo, Norihide Shinagawa and Hironori Egi; “A Preliminary Experiment on Ambiguous Presentation for Safe and Independent Chemistry Experiments”, Late Breaking Results Session in the 8th International Conference on Pervasive Computing (Pervasive2010), May 2010.

- Kaori Fujinami; “Augmented Chemistry Experiment – A Smart Space beyond Safety at a Moment”, The 3rd Asia-European Workshop on Ubiquitous Computing (AEWUC’10), May 2010.

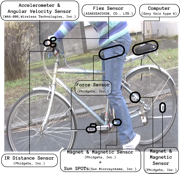

Augmented Bike for Safe and Effective Notification (2009.4-2013.3)

We are investigating methods for notifying a bicycle rider in a safe and effective way. A bicycle navigation system or a mobile phone can interrupt a rider regardless of how he or she is riding, which can put the rider at risk. Our idea is to determine an appropriate timing for the presentation based on the state of riding, e.g., speed, balance, and whether the rider is seated. For example, our method might intentionally delay the notification of an incoming e-mail message while the bike is moving fast, to avoid an unsafe allocation of cognitive load. The modality of the notification could also be determined in the same manner. Currently, we are developing a sensor/actuator-rich bicycle to collect data and to find relationships among the state of riding, the effectiveness of notifications, and the safety of interruptions.
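The timing-and-modality idea above can be sketched as a small scheduler. It queues incoming messages, defers them while the riding state looks unsafe, and otherwise picks a modality from the same state. Every threshold, field name (such as `roll_std_deg` as a balance indicator), and modality rule here is a hypothetical placeholder. The real relationships are exactly what the sensor-rich bicycle is meant to reveal.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class RidingState:
    speed_kmh: float
    seated: bool
    roll_std_deg: float   # hypothetical balance indicator: std. dev. of roll angle

# Hypothetical thresholds, not derived from the project's data.
STOPPED_KMH = 1.0
MAX_SPEED_FOR_INTERRUPT_KMH = 10.0
MAX_ROLL_STD_DEG = 5.0

class NotificationScheduler:
    def __init__(self) -> None:
        self.pending: deque = deque()

    def choose_modality(self, state: RidingState) -> Optional[str]:
        """Return a modality, or None to defer delivery."""
        if state.speed_kmh < STOPPED_KMH:
            return "visual"            # rider can safely look at a display
        if (state.speed_kmh > MAX_SPEED_FOR_INTERRUPT_KMH
                or state.roll_std_deg > MAX_ROLL_STD_DEG
                or not state.seated):
            return None                # unsafe moment: keep the message queued
        return "vibration"             # moving slowly and stably: low-demand cue

    def flush(self, state: RidingState) -> List[Tuple[str, str]]:
        """Deliver all queued messages if the current state allows it."""
        modality = self.choose_modality(state)
        delivered: List[Tuple[str, str]] = []
        if modality is None:
            return delivered
        while self.pending:
            delivered.append((modality, self.pending.popleft()))
        return delivered

    def on_message(self, msg: str, state: RidingState) -> List[Tuple[str, str]]:
        self.pending.append(msg)
        return self.flush(state)
```

Calling `flush` periodically with fresh sensor readings would release deferred messages once the rider slows down or stops.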